Understanding List Crawling: Your Gateway to Structured Data

List crawling is a specialized form of web scraping that focuses on extracting collections of similar items from websites – like product catalogs, article listings, or directory pages. Unlike general web scraping that targets various elements across different pages, list crawling systematically processes structured data from lists using specific techniques for handling pagination, infinite scrolling, and nested structures.

Key aspects of list crawling:

- Purpose: Extract structured data from web lists (products, articles, directories)

- Process: Start with seed URLs → Follow pagination → Parse list items → Store data

- Tools: Python libraries (Scrapy, BeautifulSoup, Requests), headless browsers

- Output: Structured formats like CSV, JSON, or databases

- Applications: Price monitoring, competitor research, trend analysis, SEO insights

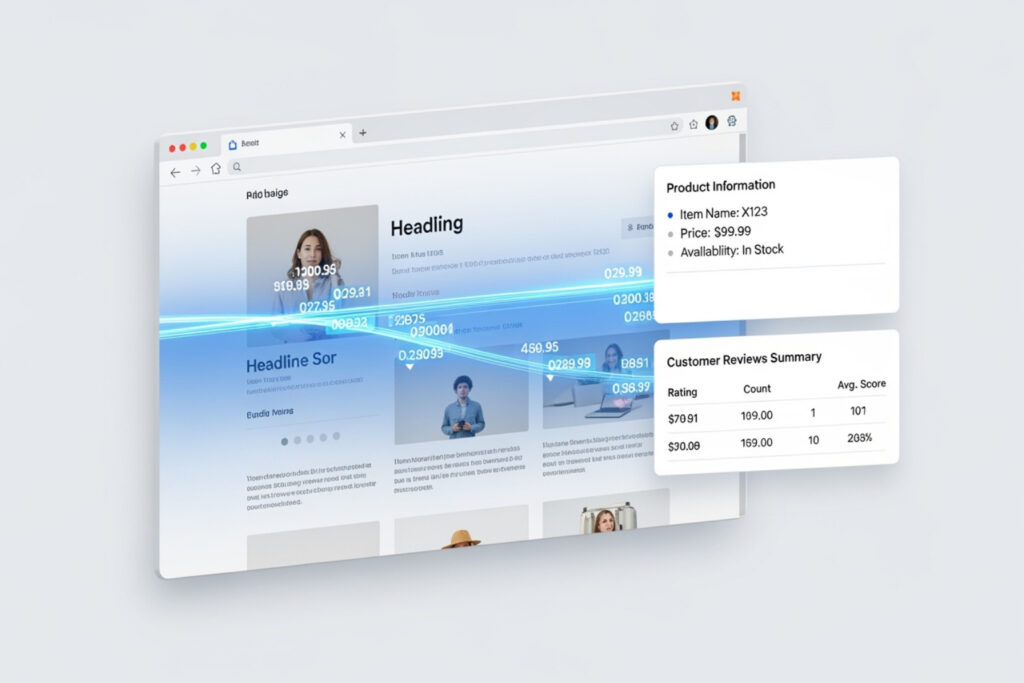

For the beauty and wellness industry, list crawling opens powerful opportunities. Imagine automatically tracking clean beauty product launches across multiple retailers, monitoring ingredient trends in skincare formulations, or analyzing customer reviews for sustainable packaging preferences. This data-driven approach aligns perfectly with the transparency and education that conscious consumers demand.

The process works by preparing a list of target URLs, configuring crawler rules to identify list patterns, and systematically extracting relevant information while respecting website policies and server resources. Modern crawlers can handle complex scenarios like JavaScript-heavy sites and dynamic content loading.

Understanding how search engines like Google crawl and index content also helps optimize your own website’s visibility – making list crawling knowledge valuable for both data collection and SEO strategy.


The Fundamentals of Web Crawling

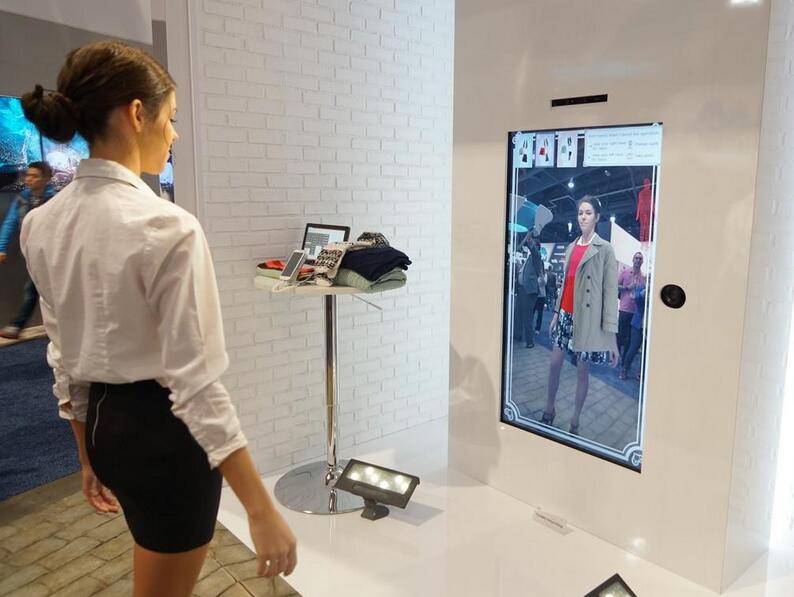

Think of web crawling as the internet’s version of a curious explorer with an endless appetite for discovery. Just like that friend who can’t resist following every interesting path on a hiking trail, a web crawler systematically browses the World Wide Web, mapping out its vast digital landscape one page at a time.

A web crawler (also called a spider or bot) is essentially a computer program that automatically visits websites and reads their content. These digital explorers start their journey from what we call seed URLs – think of them as the front doors to the internet neighborhoods they want to explore. From these starting points, crawlers follow links like breadcrumbs, finding new pages and adding them to their ever-growing list of places to visit.
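Here is a minimal sketch of a breadth-first crawler that shows the seed-URL-and-queue idea, using only Python’s standard library. It assumes a `fetch` function that takes a URL and returns HTML, or `None` if the page can't be retrieved. In practice `fetch` might wrap the Requests library. Here a small hypothetical in-memory site on example.com stands in, so the sketch runs offline.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def crawl(seed_urls, fetch, max_pages=50):
    """Breadth-first crawl from seed URLs; returns {url: html} for visited pages.

    `fetch` is an assumed callable: it takes a URL and returns HTML text,
    or None when the page cannot be retrieved.
    """
    frontier = deque(seed_urls)  # URLs waiting to be visited, oldest first
    visited = set()
    pages = {}
    while frontier and len(pages) < max_pages:
        url = frontier.popleft()
        if url in visited:
            continue
        visited.add(url)
        html = fetch(url)
        if html is None:
            continue
        pages[url] = html
        extractor = LinkExtractor()
        extractor.feed(html)
        for href in extractor.links:
            # Resolve relative links and drop #fragments so duplicates collapse.
            absolute, _fragment = urldefrag(urljoin(url, href))
            if absolute not in visited:
                frontier.append(absolute)
    return pages


# Hypothetical three-page site held in memory instead of fetched over HTTP.
site = {
    "https://example.com/": '<a href="/a">A</a> <a href="/b#top">B</a>',
    "https://example.com/a": '<a href="/b">B again</a>',
    "https://example.com/b": "<p>No links here</p>",
}
pages = crawl(["https://example.com/"], site.get)
```

A real crawler would also stay within the domains it cares about, check robots.txt and pause between requests. Those concerns are left out here to keep the frontier logic visible.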

Now, here’s where things get interesting for list crawling specifically. While regular web crawling might wander around aimlessly, list crawling has a mission: it’s hunting for structured collections of similar items. Whether that’s a beauty retailer’s product catalog or a wellness blog’s article archive, these focused crawlers know exactly what they’re looking for.

The difference between web crawling and web scraping often confuses people, but it’s actually quite simple. Web crawling is like window shopping – you’re browsing and finding what’s available. Web scraping is like actually going into the store and buying specific items. The crawler finds the pages, while the scraper extracts the exact data you need from those pages.

Search engine bots like Googlebot are probably the most famous crawlers out there. They’re the reason you can type “best clean beauty products” into Google and actually get relevant results instead of digital chaos. These tireless workers ensure the internet stays organized and searchable.

Once your list crawling adventure is complete, all that beautiful data needs somewhere to live. The most common structured data formats include CSV files (great for spreadsheets), JSON (perfect for web applications), or direct storage in databases like MySQL or MongoDB. This organized approach makes your collected data ready for analysis and integration into other systems.
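As a rough illustration of those formats, this sketch writes the same hypothetical product records as CSV and as JSON, using Python’s built-in `csv` and `json` modules. It writes to in-memory strings. To save a file instead, you could open one with `open("products.csv", "w", newline="")` and pass it to `csv.DictWriter`.

```python
import csv
import io
import json

# Hypothetical records a list crawler might produce.
products = [
    {"name": "Rosehip Face Oil", "price": 24.0, "url": "https://example.com/p/1"},
    {"name": "Aloe Gel Cleanser", "price": 15.5, "url": "https://example.com/p/2"},
]


def to_csv(records, fieldnames):
    """Serialize a list of dicts to CSV text: a header row, then one row per record."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames)
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


def to_json(records):
    """Serialize the same records to a pretty-printed JSON array."""
    return json.dumps(records, indent=2)


csv_text = to_csv(products, ["name", "price", "url"])
json_text = to_json(products)
```

CSV suits flat records that will end up in a spreadsheet. JSON keeps nested fields, such as a list of ingredients per product, without flattening them.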

For a deeper dive into how these digital explorers navigate the web, check out our comprehensive guide on Understanding Website Crawling: The Foundation of SEO.

The Core Process of List Crawling

List crawling follows a beautifully systematic approach that turns the overwhelming vastness of the internet into manageable, useful data. It’s like having a personal research assistant who never gets tired and always follows your exact instructions.

The journey begins with URL list preparation – essentially creating your crawler’s roadmap. You might manually compile a list of beauty retailers, wellness blogs, or ingredient databases, or use automated tools to find relevant starting points. For instance, if you’re researching emerging clean beauty trends, your seed URLs might include major online beauty destinations and independent wellness sites.

Next comes crawler configuration, where you teach your digital assistant exactly what to look for. This involves setting up rules that help the crawler recognize patterns in lists – whether that’s product grids, article archives, or directory pages. You’re essentially programming it to understand what constitutes valuable information versus digital noise.

When you run the crawl, your configured crawler springs into action, systematically visiting each URL and following your predefined rules. It gracefully handles website features like pagination (those “next page” buttons) and infinite scrolling (where content loads as you scroll down). This is where the magic happens – your crawler navigates complex website structures while staying focused on its mission.
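Pagination is often the first hurdle. This sketch assumes the “next page” link carries `rel="next"`. That is a common pattern, but not a guaranteed one. The sketch follows those links with the standard library’s `HTMLParser`, and the shop.example pages and `fetch` callable are hypothetical stand-ins. A site whose next button lacks that attribute needs a site-specific selector. Infinite scroll usually calls for a headless browser or the site’s underlying data API instead.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class NextLinkFinder(HTMLParser):
    """Records the href of the first <a> or <link> whose rel includes "next"."""

    def __init__(self):
        super().__init__()
        self.next_href = None

    def handle_starttag(self, tag, attrs):
        if self.next_href is not None or tag not in ("a", "link"):
            return
        attributes = dict(attrs)
        rel_values = (attributes.get("rel") or "").lower().split()
        if "next" in rel_values and attributes.get("href"):
            self.next_href = attributes["href"]


def follow_pagination(start_url, fetch, max_pages=100):
    """Yield (url, html) for each page of a listing by following rel="next" links."""
    url = start_url
    seen = set()
    while url and url not in seen and len(seen) < max_pages:
        seen.add(url)
        html = fetch(url)
        if html is None:
            break
        yield url, html
        finder = NextLinkFinder()
        finder.feed(html)
        url = urljoin(url, finder.next_href) if finder.next_href else None


# Hypothetical three-page listing; the last page has no "next" link.
listing = {
    "https://shop.example/list?page=1": '<a rel="next" href="?page=2">Next</a>',
    "https://shop.example/list?page=2": '<a class="btn" rel="next" href="?page=3">Next</a>',
    "https://shop.example/list?page=3": "<p>Last page</p>",
}
page_urls = [url for url, _html in follow_pagination("https://shop.example/list?page=1", listing.get)]
```

The `seen` set and the `max_pages` cap guard against listings whose last page links back to itself or to an earlier page.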

The data parsing and extraction phase is where your crawler becomes a detective, examining each page’s HTML code to locate the specific information you’re after. It might extract product names, ingredient lists, prices, or customer reviews – whatever you’ve trained it to recognize. This targeted approach ensures you collect only the relevant gems from potentially massive amounts of web content.
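Here is a minimal sketch of that parsing step. It assumes a listing where each item is an `<li class="product">` containing `name` and `price` spans. The markup and class names are hypothetical, and real sites vary, so the selectors always need adjusting. It uses Python’s built-in `HTMLParser`, so it runs without extra packages.

```python
from html.parser import HTMLParser


class ProductListParser(HTMLParser):
    """Extracts name/price pairs from <li class="product"> items.

    The class names "product", "name" and "price" are hypothetical; adjust
    them to match the markup of the site being crawled.
    """

    def __init__(self):
        super().__init__()
        self.items = []
        self._current = None  # dict for the list item being parsed
        self._field = None    # "name" or "price" while inside that span

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if tag == "li" and "product" in classes:
            self._current = {"name": "", "price": ""}
        elif self._current is not None and tag == "span":
            for field in ("name", "price"):
                if field in classes:
                    self._field = field

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None
        elif tag == "li" and self._current is not None:
            self.items.append(self._current)
            self._current = None

    def handle_data(self, data):
        # Only keep text that sits inside a field we care about.
        if self._current is not None and self._field:
            self._current[self._field] += data.strip()


html = """
<ul class="catalog">
  <li class="product"><span class="name">Rosehip Face Oil</span>
      <span class="price">$24.00</span></li>
  <li class="product"><span class="name">Aloe Gel Cleanser</span>
      <span class="price">$15.50</span></li>
</ul>
"""
parser = ProductListParser()
parser.feed(html)
```

With BeautifulSoup the same selection is shorter. `soup.select("li.product")` finds the items, and `item.select_one(".name").get_text(strip=True)` reads each field.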

Finally, data analysis and storage transforms your raw findings into actionable insights. The extracted information gets organized into structured formats ready for analysis. You might find trending ingredients in skincare formulations, compare product prices across vendors, or identify emerging wellness topics that resonate with your audience.

How Crawled Data Powers SEO

List crawling isn’t just about collecting data – it’s a secret weapon for boosting your website’s search engine performance. Understanding how crawlers work gives you insider knowledge on making your content more findable and engaging.

Indexing and ranking form the foundation of search engine visibility. When search engine crawlers visit your website, they’re deciding whether your content deserves a spot in their massive index. Well-structured, easily crawlable content gets indexed faster and ranks better for relevant searches. If crawlers can’t access or understand your pages, your content might as well be invisible to potential visitors searching for beauty and wellness insights.

Crawlability determines how easily search engines can navigate and understand your website. Poor crawlability – caused by broken links, blocked pages, or confusing site structure – frustrates both search engines and visitors. By applying list crawling techniques to audit your own site, you can identify and fix these issues, ensuring all your valuable content gets the visibility it deserves.

Backlink analysis reveals the digital connections that boost your authority. List crawling helps you find who’s linking to your content, analyze what makes certain pages link-worthy, and identify opportunities for building valuable relationships with other websites in the beauty and wellness space.
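This small-scale sketch of backlink finding scans pages you have already crawled and reports which external pages link to a given domain. The domains are hypothetical. It only sees links on pages your own crawler visited, whereas commercial tools such as Ahrefs maintain far larger link indexes.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class AnchorCollector(HTMLParser):
    """Collects href values from <a> tags."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def on_domain(host, domain):
    """True if host is the domain itself or one of its subdomains."""
    return host == domain or host.endswith("." + domain)


def find_backlinks(pages, target_domain):
    """Map each external source page to the target-domain URLs it links to.

    `pages` is a dict of {page_url: html} gathered by an earlier crawl.
    """
    backlinks = {}
    for source_url, html in pages.items():
        if on_domain(urlparse(source_url).hostname or "", target_domain):
            continue  # links between our own pages are not backlinks
        collector = AnchorCollector()
        collector.feed(html)
        for href in collector.hrefs:
            absolute = urljoin(source_url, href)
            if on_domain(urlparse(absolute).hostname or "", target_domain):
                backlinks.setdefault(source_url, []).append(absolute)
    return backlinks


# Hypothetical crawl results: one external blog and one page of our own site.
crawled = {
    "https://blog.example.org/serum-review": (
        '<a href="https://www.mybrand.example/serum">this serum</a>'
        '<a href="/about">About the blog</a>'
    ),
    "https://www.mybrand.example/": '<a href="/serum">Our serum</a>',
}
links = find_backlinks(crawled, "mybrand.example")
```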

Competitor monitoring keeps you ahead of industry trends without the manual legwork. List crawling allows you to systematically track what topics your industry peers are covering, what products they’re featuring, and how they’re structuring their content. This intelligence helps you identify content gaps and opportunities in the beauty and wellness landscape.

SERP tracking and keyword research become much more manageable with list crawling. You can monitor how your content ranks for important beauty and wellness terms, track seasonal trends in search behavior, and identify emerging keywords that align with your audience’s interests. For more insights on this topic, explore The Role of Keywords in SEO and Content Creation.

The beauty of list crawling for SEO lies in its systematic approach – instead of guessing what works, you’re making data-driven decisions that help your content reach the people who need it most.

Essential Tools and Techniques for Effective List Crawling

Getting started with list crawling is like learning to cook – you need the right ingredients and proven techniques to create something delicious. In our case, we’re cooking up valuable data insights that can transform your beauty and wellness business.

Python has become the go-to language for web crawling, and for good reason. It’s like having a Swiss Army knife for data extraction – versatile, reliable, and surprisingly easy to use. The beauty of Python lies in its extensive collection of open-source libraries that handle the heavy lifting for us.

Think of performance optimization as tuning a musical instrument – everything needs to work in harmony. When we talk about performance optimization, we’re really talking about being respectful guests on the internet. We don’t want to overwhelm websites with too many requests at once, which could slow them down for other users.

Parallel crawling is like having multiple assistants working on different tasks simultaneously. Instead of visiting one website at a time, we can have several crawlers working together, dramatically reducing the time needed to gather data. This is especially valuable when you’re tracking seasonal beauty trends or monitoring price changes across multiple retailers.
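This minimal sketch fetches pages in parallel with a bounded thread pool from Python’s `concurrent.futures`. Threads suit crawling because most of the time is spent waiting on the network. The `fetch` argument is an assumed callable; here a fake one simulates a failure. `max_workers` caps how many requests are in flight at once, which is what keeps the parallelism polite.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def fetch_all(urls, fetch, max_workers=4):
    """Fetch URLs concurrently; return (results, errors) dicts keyed by URL."""
    results, errors = {}, {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # Submit every URL, remembering which future belongs to which URL.
        futures = {pool.submit(fetch, url): url for url in urls}
        for future in as_completed(futures):
            url = futures[future]
            try:
                results[url] = future.result()
            except Exception as exc:  # one failed page should not stop the batch
                errors[url] = exc
    return results, errors


def fake_fetch(url):
    """Stand-in for a real HTTP call; fails for one URL to show error handling."""
    if url.endswith("/broken"):
        raise ConnectionError("simulated network failure")
    return f"<html><title>{url}</title></html>"


urls = ["https://a.example/1", "https://b.example/broken", "https://a.example/2"]
results, errors = fetch_all(urls, fake_fetch, max_workers=2)
```

In a real crawler you would pair this with per-site delays. A pool of workers hitting one retailer at once is exactly the overload described above.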

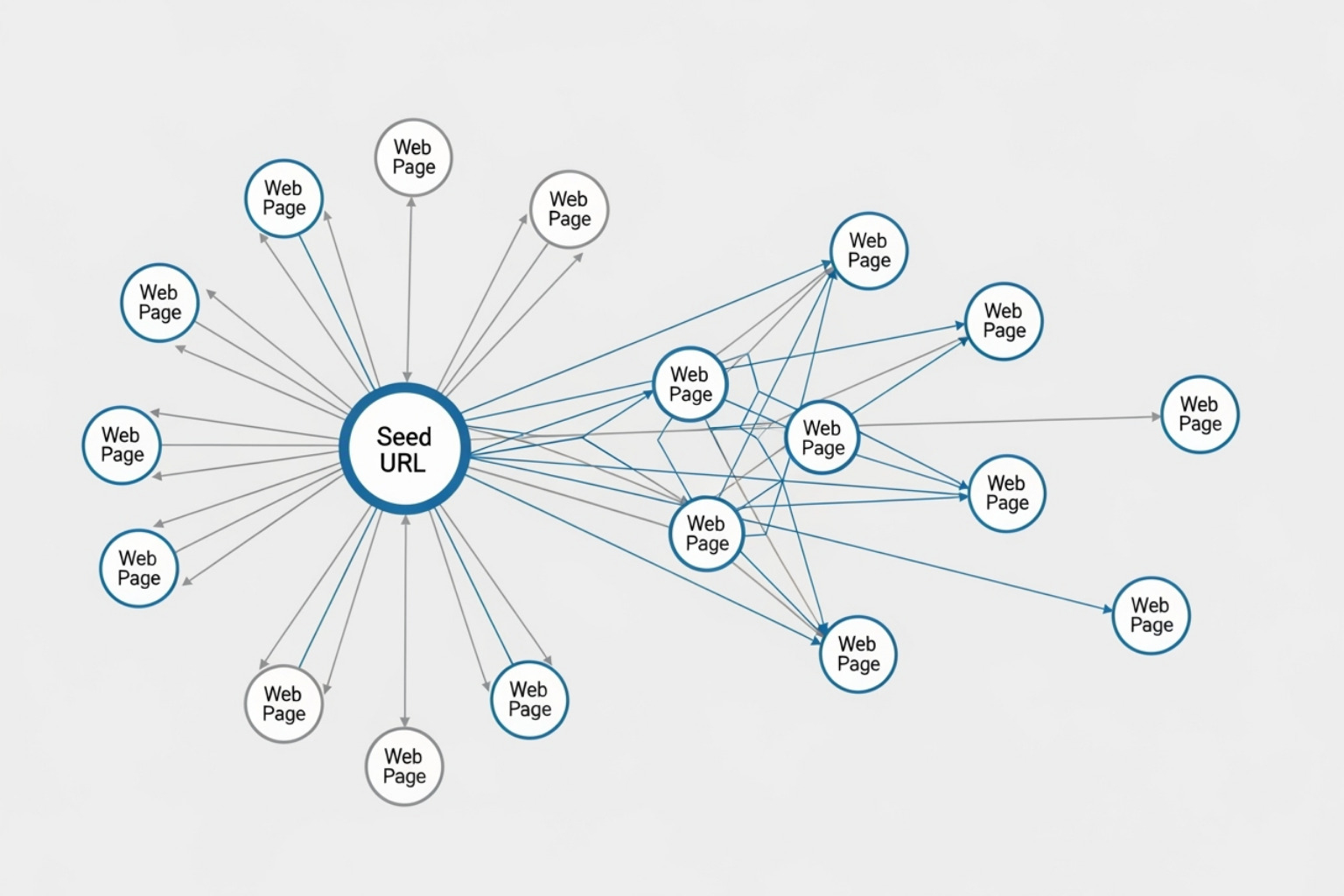

Modern websites often rely heavily on JavaScript to create those smooth, interactive experiences we love. This is where headless browsers come into play. These invisible browsers can execute JavaScript just like a regular browser, but without the visual interface. They’re perfect for capturing data from those dynamic product galleries or infinite-scroll ingredient lists that traditional crawlers might miss.

For those looking to ensure their own websites are crawler-friendly and optimized for search engines, our guide on Technical SEO: Ensuring Your Website is Crawlable and Indexable offers valuable insights.

Key Libraries for Python-Based Crawlers

Building effective list crawling solutions feels much easier when you have the right tools in your toolkit. Let’s explore the essential Python libraries that make data extraction both powerful and accessible.

The Requests library serves as our digital messenger, fetching web pages with simple, neat commands. It handles the technical details of making HTTP requests, allowing us to focus on what matters most – the data itself. Think of it as your reliable friend who always brings back exactly what you asked for.

Once we have that raw HTML content, BeautifulSoup becomes our detective, carefully examining the page structure to find exactly what we’re looking for. Whether you’re extracting product names, ingredient lists, or customer review scores, BeautifulSoup makes it feel almost intuitive. It’s particularly helpful when you’re analyzing clean beauty product descriptions or comparing wellness supplement ingredients across different brands.

For larger, more complex projects, the Scrapy framework steps in as our project manager. This comprehensive framework handles many of the behind-the-scenes complexities, from managing multiple requests simultaneously to processing data through organized pipelines. It’s like having an experienced team leader who knows how to coordinate everything smoothly.

When websites get fancy with JavaScript and dynamic content, Playwright and Selenium become our special agents. These browser automation tools can actually control a real web browser, clicking buttons, scrolling through pages, and waiting for content to load – just like a human user would. This capability is crucial when you’re trying to capture data from those beautiful, interactive product catalogs or customer review sections that load as you scroll.

Handling Dynamic and JavaScript-Heavy Websites

The modern web is full of interactive, dynamic content that creates engaging user experiences. However, this same technology that makes websites beautiful can also make list crawling more challenging – and more interesting.

JavaScript rendering is essential when websites use code to fetch and display content after the initial page loads. Many beauty and wellness sites use this approach to create smooth, app-like experiences. Our crawlers need to be patient, waiting for this JavaScript to execute and reveal the hidden content, much like waiting for a face mask to work its magic.

Dynamic content and AJAX technologies allow websites to update specific sections without refreshing the entire page. You’ve probably experienced this when filtering products by price range or skin type – the results update instantly without a page reload. This creates a seamless user experience but requires our crawlers to simulate these same interactions.

Infinite scroll has become increasingly popular, especially for product listings and social media feeds. Instead of traditional page numbers, more content appears automatically as you scroll down. For list crawling, this means our tools need to simulate scrolling behavior and patiently wait for new content to appear – like slowly revealing layers of a complex skincare routine.

The solution often involves using headless browsers controlled by libraries like Selenium or Playwright. These tools launch actual browser instances that can execute JavaScript and interact with pages just like human users. Google provides helpful resources on this approach, including guidance on Using Puppeteer to extract data.

Sometimes, we can take a more direct approach through API reverse-engineering. Many dynamic websites load their data from hidden API endpoints. By identifying these direct data sources, we can often bypass the need for browser automation entirely, making our list crawling faster and more efficient – like finding a shortcut to your favorite hiking trail.
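To illustrate the hidden-API approach, this sketch pages through a JSON endpoint. The endpoint, its `offset`/`limit` query parameters and its `{"items": [...], "total": N}` response shape are all hypothetical. You would find the real ones in your browser’s developer tools (the Network tab) and check the site’s terms before using them. The `fake_fetch_json` function simulates a 120-item catalog so the sketch runs offline. Against a live site, `fetch_json` might be `lambda url: requests.get(url, timeout=10).json()`.

```python
from urllib.parse import parse_qs, urlparse


def fetch_all_items(fetch_json, base_url, page_size=50, max_pages=100):
    """Collect every item from a paginated JSON endpoint.

    Assumes (hypothetically) that the endpoint accepts ?offset=&limit= and
    returns {"items": [...], "total": N}; adjust to the real API you find.
    """
    items = []
    offset = 0
    for _ in range(max_pages):  # hard cap so a misbehaving endpoint cannot loop forever
        payload = fetch_json(f"{base_url}?offset={offset}&limit={page_size}")
        batch = payload.get("items", [])
        items.extend(batch)
        offset += len(batch)
        if not batch or offset >= payload.get("total", 0):
            break
    return items


# Offline stand-in for the site's API: a fake 120-product catalog.
CATALOG = [{"id": i, "name": f"Product {i}"} for i in range(1, 121)]


def fake_fetch_json(url):
    query = parse_qs(urlparse(url).query)
    offset, limit = int(query["offset"][0]), int(query["limit"][0])
    return {"items": CATALOG[offset:offset + limit], "total": len(CATALOG)}


items = fetch_all_items(fake_fetch_json, "https://shop.example/api/products")
```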

Overcoming Challenges and Ensuring Ethical Compliance

List crawling can feel like navigating a digital obstacle course sometimes. Websites don’t always roll out the red carpet for crawlers, and honestly, that’s completely understandable. They need to protect their data and ensure their servers don’t get overwhelmed. As responsible data collectors, we need to work within these boundaries while staying on the right side of the law.

Think of rate limiting as a website’s way of saying “slow down there, partner.” Many sites will only allow a certain number of requests from your IP address within a specific timeframe. Cross that line, and you might find yourself temporarily locked out or, in worst cases, permanently blocked. It’s like being asked to leave a store for browsing too aggressively.
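When a site does rate-limit you, it commonly responds with HTTP status 429 (Too Many Requests). It may add a `Retry-After` header giving the wait as a number of seconds or as an HTTP date. This minimal standard-library sketch turns that header into a wait time. The 60-second fallback is an arbitrary assumption.

```python
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime


def retry_after_seconds(header_value, now=None, default=60.0):
    """Convert a Retry-After header (seconds or HTTP-date) into seconds to wait.

    Falls back to `default` (an arbitrary choice) when the header is missing
    or cannot be parsed.
    """
    if not header_value:
        return default
    value = header_value.strip()
    if value.isdigit():  # e.g. "Retry-After: 120"
        return float(value)
    try:  # e.g. "Retry-After: Wed, 21 Oct 2015 07:28:00 GMT"
        retry_at = parsedate_to_datetime(value)
    except (TypeError, ValueError):  # older Pythons raise TypeError on bad input
        return default
    if retry_at.tzinfo is None:
        retry_at = retry_at.replace(tzinfo=timezone.utc)
    now = now or datetime.now(timezone.utc)
    return max(0.0, (retry_at - now).total_seconds())
```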

IP blocking takes this a step further. If a website’s security systems detect what they consider suspicious activity – maybe too many rapid-fire requests or unusual browsing patterns – they might block your entire IP address. This is their digital equivalent of putting up a “no entry” sign specifically for you.

Then there are CAPTCHAs – those little puzzles asking you to identify traffic lights or type squiggly letters. While they can be frustrating for humans, they’re designed to stop automated bots in their tracks. Some advanced crawlers can handle these, but it adds complexity to the whole process.

Building robust error handling into your crawler is absolutely essential. Websites aren’t perfect – they have broken links, unexpected page changes, and occasional server hiccups. Smart crawlers implement retry mechanisms with something called exponential backoff. This means if a request fails, the crawler waits a bit before trying again, and if it fails again, it waits even longer. It’s like being polite about knocking on a door that might not be answered right away.
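Here is a minimal sketch of retry with exponential backoff. It assumes failures surface as `ConnectionError` or `TimeoutError`; with an HTTP client library you would list that library’s exception types instead. It also adds random "jitter", a common refinement that scales each wait so many clients don’t retry at exactly the same moments. `sleep` is injectable so the example can record the delays instead of waiting.

```python
import random
import time


def with_backoff(func, max_attempts=5, base_delay=1.0, max_delay=60.0,
                 retry_on=(ConnectionError, TimeoutError), sleep=time.sleep):
    """Call func(); on a retryable error, wait and try again with growing delays.

    The nominal delay doubles each attempt (1s, 2s, 4s, ... up to max_delay);
    each wait is scaled by a random factor between 0.5 and 1.0 ("jitter").
    """
    for attempt in range(max_attempts):
        try:
            return func()
        except retry_on:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error to the caller
            delay = min(max_delay, base_delay * 2 ** attempt)
            sleep(delay * random.uniform(0.5, 1.0))


# Usage: a flaky operation that fails twice, then succeeds.
attempts = {"count": 0}
waits = []


def flaky_fetch():
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise ConnectionError("temporary failure")
    return "<html>ok</html>"


result = with_backoff(flaky_fetch, sleep=waits.append)  # record waits instead of sleeping
```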

The legal landscape around data collection has become increasingly important, especially with regulations like GDPR in Europe and CCPA in California. These laws protect people’s personal information, and any list crawling operation needs to respect these boundaries. When we’re extracting data about beauty products or wellness trends, we’re usually dealing with public information, but it’s always wise to stay informed about privacy requirements.

Managing Your Crawler’s Identity and Footprint

Every time your crawler visits a website, it’s essentially introducing itself. The user agent string is like a digital business card, telling the website what kind of browser or bot is making the request. Some websites automatically block requests from obviously automated sources, so managing this identity becomes crucial for successful list crawling.

Rotating user agents can make your crawler appear more human-like by mimicking different browsers and devices. You might alternate between appearing as Chrome on Windows, Safari on Mac, or even mobile browsers. Some crawlers go a step further by including contact information in their user agent string – it’s like leaving your phone number when you visit, just in case the website owner needs to reach out. For more on how these identification strings work, see More on user agent strings.
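The transparent option is a user agent that names the bot and says how to reach its operator. With Python’s `urllib.request` it might look like this. The bot name, URL and email address are placeholders. The `+URL` style mirrors the convention used by major crawlers such as Googlebot.

```python
import urllib.request

# Placeholder identity: replace the bot name, info URL and contact address with your own.
USER_AGENT = "BeautyTrendsBot/1.0 (+https://example.com/bot-info; bot@example.com)"


def build_request(url):
    """Create a request that identifies the crawler and how to contact its operator."""
    return urllib.request.Request(url, headers={"User-Agent": USER_AGENT})


request = build_request("https://example.com/products")
# urllib.request.urlopen(request, timeout=10) would send it over the network.
```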

IP address rotation through proxy servers or VPNs is another key strategy. Instead of all your requests coming from the same location, they appear to originate from different places around the world. This distributes the load and makes it much harder for websites to identify and block your crawling operation. Think of it as having multiple postal addresses for your mail delivery.

Managing request frequency is perhaps the most important aspect of maintaining a low profile. Nobody likes that person who talks too fast and doesn’t pause for breath – websites feel the same way about overly aggressive crawlers. By implementing thoughtful delays between requests, your crawler appears more human-like and respectful of the website’s resources.
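One simple way to implement those delays is a per-host throttle. It enforces a minimum gap between requests to the same site while letting requests to different sites proceed. This sketch is an illustration, not a library API. The two-second default is an arbitrary assumption; a site’s robots.txt `Crawl-delay`, where present, is a better guide. The clock and sleep functions are injectable, so the throttle can be tested without waiting. It is not thread-safe as written.

```python
import time
from urllib.parse import urlparse


class PoliteThrottle:
    """Enforces a minimum delay between consecutive requests to the same host."""

    def __init__(self, min_delay=2.0, clock=time.monotonic, sleep=time.sleep):
        self.min_delay = min_delay  # seconds; 2.0 is an arbitrary default
        self.clock = clock
        self.sleep = sleep
        self._last_request = {}  # host -> time of the previous request

    def wait(self, url):
        """Block until it is polite to request `url`, then record the request time."""
        host = urlparse(url).netloc
        now = self.clock()
        last = self._last_request.get(host)
        if last is not None:
            remaining = self.min_delay - (now - last)
            if remaining > 0:
                self.sleep(remaining)
                now += remaining
        self._last_request[host] = now


throttle = PoliteThrottle(min_delay=2.0)
# Call throttle.wait(url) immediately before each request to that URL.
```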

Best Practices for Ethical List Crawling

Being a good digital citizen starts with respecting robots.txt files. These simple text files are like the “house rules” that website owners post for crawlers. They specify which parts of their site are off-limits and which areas are welcome for exploration. Ignoring these guidelines isn’t just rude – it can lead to legal trouble and definitely goes against the spirit of ethical web crawling.
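Python’s standard library can read these house rules for you through `urllib.robotparser`. This sketch parses a hypothetical robots.txt from a string so it runs offline. Against a live site you would call `set_url()` with the site’s robots.txt address and then `read()`. The bot name is also a placeholder.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for an example shop.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Disallow: /account/
Crawl-delay: 5
"""

AGENT = "BeautyTrendsBot"  # placeholder bot name

rules = RobotFileParser()
rules.parse(ROBOTS_TXT.splitlines())

# Check each URL against the rules before fetching it.
candidates = [
    "https://shop.example/products/rosehip-oil",
    "https://shop.example/checkout/cart",
]
allowed = {url: rules.can_fetch(AGENT, url) for url in candidates}
delay = rules.crawl_delay(AGENT)  # seconds requested by the site, or None if unset
```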

Many websites also provide sitemap.xml files, which are like treasure maps showing you exactly where to find the content you’re looking for. These files list the URLs that the website owner wants search engines and other crawlers to find. For list crawling projects focused on beauty and wellness content, these sitemaps can be incredibly valuable starting points.
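Sitemaps follow a standard XML format (the sitemaps.org protocol), so the standard library’s `xml.etree.ElementTree` can pull out the URLs. The sitemap content below is hypothetical and inlined so the sketch runs offline.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Hypothetical sitemap body; a crawler would download it first.
SITEMAP_XML = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://shop.example/products/serum</loc><lastmod>2024-05-01</lastmod></url>
  <url><loc>https://shop.example/products/cleanser</loc></url>
</urlset>"""


def sitemap_urls(xml_text):
    """Return (loc, lastmod) pairs from a <urlset> sitemap; lastmod may be None."""
    root = ET.fromstring(xml_text)
    entries = []
    for url in root.findall("sm:url", SITEMAP_NS):
        loc = url.findtext("sm:loc", default="", namespaces=SITEMAP_NS).strip()
        lastmod = url.findtext("sm:lastmod", default=None, namespaces=SITEMAP_NS)
        entries.append((loc, lastmod))
    return entries


entries = sitemap_urls(SITEMAP_XML)
```

Large sites often publish a sitemap index (a `<sitemapindex>` pointing to further sitemaps), which needs one more level of fetching. The `site_maps()` method of `urllib.robotparser.RobotFileParser` (Python 3.8+) also lists any `Sitemap:` lines found in robots.txt.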

Server load consideration is about being mindful of the impact your crawler has on the websites you visit. Every request uses some of their server resources, and too many simultaneous requests can slow down the site for regular visitors. It’s like being considerate about not hogging the bathroom when there’s a long line – everyone deserves their turn.

Data privacy has become increasingly important in our connected world. When conducting list crawling for beauty and wellness research, we need to be especially careful about any personal information that might be included in reviews, user profiles, or customer data. The goal is to gather insights about trends, products, and public information while respecting individual privacy rights.

Providing contact information in your crawler’s user agent or through other means creates transparency and accountability. Website administrators appreciate being able to reach out if they have questions or concerns about your crawling activity. It’s the difference between being an anonymous visitor and being someone who’s willing to have a conversation.

This ethical approach to list crawling aligns perfectly with the transparency and responsibility that conscious consumers expect from brands in the beauty and wellness space. Just as we value clean ingredients and honest marketing, we should apply the same principles to how we gather and use data. For more insights on creating positive digital experiences, explore our guide on User Experience (UX) and Its Impact on SEO.

Frequently Asked Questions about Web Crawlers

When diving into list crawling, many people wonder about the different types of crawlers they might encounter online. It’s a bit like understanding the various visitors that might come to your digital doorstep – some are helpful, others are neutral, and a few might cause concern.

What are the different types of web crawlers?

The internet is busy with different types of web crawlers, each serving a unique purpose in our digital ecosystem. Think of them as different specialists, each with their own job to do.

Search engine crawlers are probably the most familiar faces in this crowd. Google’s Googlebot and Microsoft’s Bingbot are the heavy hitters here, working tirelessly to discover and index web content so we can find it through search results. These crawlers are like dedicated librarians, cataloging every book (webpage) they can find. Together, Googlebot and Bingbot handle more than 60% of all legitimate bot traffic on the web.

SEO analytics crawlers serve a different purpose entirely. These specialized tools help SEO professionals and marketers understand their competitive landscape. AhrefsBot, for instance, visits an impressive 6 billion web pages daily, making it the second most active crawler after Googlebot. These crawlers focus on gathering data for backlink analysis, competitor research, and site auditing – exactly the kind of intelligence that helps businesses make informed decisions about their online presence.

Commercial crawlers represent the custom-built solutions that businesses create for specific data collection needs. Whether it’s monitoring competitor prices across e-commerce platforms, tracking product availability, or gathering market intelligence, these crawlers serve targeted business objectives. Tools like Screaming Frog and Lumar have made commercial crawling more accessible to businesses of all sizes.

Social media bots might surprise you with their activity levels. When you share a link on Facebook or Twitter, specialized crawlers like Facebook Crawler or Twitterbot immediately visit that URL to create those helpful preview cards you see. Pinterest’s web crawler actually ranks as the 6th most active crawler globally, highlighting how much social platforms rely on automated content discovery.

AI and LLM data crawlers represent the newest and fastest-growing category. As artificial intelligence models require vast amounts of text data for training, specialized crawlers have emerged to collect this information. OpenAI’s GPTBot, despite being relatively new, already generates almost a full percent of all legitimate bot traffic – a testament to the growing importance of AI in our digital landscape.

Here’s how some of the major players compare:

| Crawler | Purpose | Behavior & Management |
|---|---|---|
| Googlebot | Search engine indexing | Respects robots.txt, crawls regularly, focuses on fresh content and user experience signals |
| AhrefsBot | SEO analytics and backlink analysis | Very active crawler, can be blocked via robots.txt, used for competitive intelligence |
| GPTBot | AI training data collection | Newer crawler, can be controlled through robots.txt, focuses on text-heavy content |

How can you tell the difference between a good bot and a malicious one?

Distinguishing between helpful and harmful crawlers is crucial for website owners and anyone involved in list crawling. The good news is that legitimate crawlers usually follow predictable patterns and identify themselves clearly.

User agent verification is your first line of defense. Legitimate crawlers typically use clear, identifiable user agent strings that include contact information or official company names. For example, Googlebot identifies itself clearly in its user agent. Because any bot can copy that string, Google also documents a process for verifying that a request claiming to be Googlebot is authentic.

IP address and DNS lookups provide additional verification layers. Legitimate crawlers usually operate from known IP ranges that can be verified through reverse DNS lookups. If a crawler claims to be Googlebot but originates from an unverified IP address, that’s a red flag.
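This Python sketch shows that reverse-then-forward check. It looks up the hostname for the visiting IP and confirms it ends in a domain the crawler’s operator publishes. It then resolves that hostname and confirms it maps back to the same IP. The domain suffixes shown are an assumption based on Google’s published guidance for Googlebot, so check the operator’s current documentation. The lookups are injectable so they can be faked in tests, and `socket.gethostbyname_ex` covers IPv4 only.

```python
import socket


def verify_crawler_ip(ip, allowed_suffixes=(".googlebot.com", ".google.com"),
                      reverse_lookup=socket.gethostbyaddr,
                      forward_lookup=socket.gethostbyname_ex):
    """Reverse-then-forward DNS check for a visitor claiming to be a known crawler.

    allowed_suffixes is an assumption for Googlebot; use the domains the
    crawler's operator actually documents.
    """
    try:
        hostname, _aliases, _addresses = reverse_lookup(ip)
    except OSError:
        return False  # no PTR record: the claim cannot be verified
    if not hostname.endswith(allowed_suffixes):
        return False
    try:
        _name, _aliases, addresses = forward_lookup(hostname)
    except OSError:
        return False
    # The hostname must resolve back to the same IP; a PTR record alone can be faked.
    return ip in addresses


# verify_crawler_ip("66.249.66.1") would perform live DNS lookups.
```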

Behavioral analysis often reveals the most telling differences. Good bots respect rate limits, follow robots.txt files, and maintain reasonable request patterns. Malicious crawlers, on the other hand, often exhibit abnormal request patterns – they might send requests too quickly, ignore robots.txt rules, or attempt to access restricted areas of websites repeatedly.
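On the behavioral side, this sketch flags client IPs that send more than a given number of requests within a sliding time window. It assumes you have already parsed your access log into time-sorted `(timestamp, ip)` pairs. The thresholds are placeholders. A high request rate is a signal worth investigating, not proof of bad intent.

```python
from collections import defaultdict, deque


def flag_fast_clients(requests, window_seconds=10.0, max_requests=20):
    """Return the set of IPs that exceed max_requests within any sliding window.

    `requests` is an iterable of (timestamp_seconds, ip) tuples sorted by time,
    for example parsed from a web server access log. The default thresholds
    are placeholders, not recommended values.
    """
    recent = defaultdict(deque)  # ip -> timestamps inside the current window
    flagged = set()
    for timestamp, ip in requests:
        window = recent[ip]
        window.append(timestamp)
        # Drop timestamps that have fallen out of the window.
        while window and timestamp - window[0] > window_seconds:
            window.popleft()
        if len(window) > max_requests:
            flagged.add(ip)
    return flagged
```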

How does list crawling apply to the beauty and wellness industry?

For beauty and wellness businesses like ours at Beyond Beauty Lab, list crawling opens up fascinating possibilities for understanding industry trends and consumer preferences.

Ingredient analysis becomes incredibly powerful when you can systematically gather ingredient lists from hundreds of skincare products across multiple retailers. This data helps identify emerging trends in clean beauty formulations, track the adoption of new sustainable ingredients, or understand which ingredients are gaining popularity among conscious consumers.
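This minimal sketch shows that kind of ingredient analysis. It assumes each crawled product record carries a comma-separated ingredient string. The products are hypothetical, and the normalization (trimming and lowercasing) is deliberately simple. Real INCI lists need more careful cleanup, such as handling parentheses and synonyms.

```python
from collections import Counter

# Hypothetical crawled records, one ingredient string per product.
products = [
    {"name": "Daily Moisturizer", "ingredients": "Aqua, Glycerin, Niacinamide, Squalane"},
    {"name": "Night Serum", "ingredients": "Aqua, Niacinamide, Bakuchiol, Squalane"},
    {"name": "Gentle Cleanser", "ingredients": "Aqua, Glycerin, Coco-Glucoside"},
]


def ingredient_counts(records):
    """Count how many products list each ingredient (case-insensitive)."""
    counts = Counter()
    for record in records:
        # A set, so an ingredient repeated within one list counts once per product.
        names = {part.strip().lower() for part in record["ingredients"].split(",") if part.strip()}
        counts.update(names)
    return counts


counts = ingredient_counts(products)
top = counts.most_common(3)  # the three most widely used ingredients
```

Running the same count over crawls taken months apart is one way to see which ingredients are gaining ground.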

Product trend spotting through list crawling allows brands to stay ahead of the curve. By monitoring new product launches across beauty retailers, analyzing product descriptions and marketing language, and tracking seasonal trends, beauty businesses can make more informed decisions about their own product development and marketing strategies.

Competitor price monitoring helps maintain competitive positioning in the beauty market. Understanding how similar products are priced across different channels provides valuable insights for pricing strategies and market positioning.

Consumer sentiment analysis through review and social media list crawling reveals authentic consumer opinions about products, ingredients, and brands. This feedback is invaluable for understanding what really matters to beauty consumers – whether they’re prioritizing sustainability, effectiveness, or specific ingredient preferences.

The beauty industry’s focus on transparency and education makes list crawling particularly valuable. When consumers demand detailed ingredient information and authentic reviews, having access to comprehensive, up-to-date data becomes a significant competitive advantage. This data-driven approach aligns perfectly with the educational mission we embrace at Beyond Beauty Lab, helping us provide the most current and relevant insights to our community.

The Fundamentals of Web Crawling

We often talk about “crawling” in the context of the internet, but what does it really mean? At its heart, web crawling is the systematic process of browsing the World Wide Web. Think of it as a diligent librarian, constantly scanning and cataloging new books (web pages) to ensure everything is organized and findable.

A web crawler, also known as a spider or bot, is a computer program that automatically scans and systematically reads web pages to index them for search engines. These digital explorers begin their journey from a set of known URLs, called “seed URLs.” From these starting points, they carefully follow links embedded within the pages, find new content, and add those new URLs to a queue for further exploration.

It’s important to distinguish between web crawling and web scraping. While often used interchangeably, they’re not quite the same. Web crawling is actually a component of web scraping; the crawler logic finds URLs to be processed by the scraper code. Web scraping, on the other hand, involves the extraction of specific data from those web pages. So, the crawler identifies the pages, and the scraper extracts the information we need from them.

Search engine bots, like Googlebot and Bingbot, are the most prominent examples of web crawlers. They are crucial for ensuring that when you search for something online, you get relevant and up-to-date results. Without these tireless bots, the internet would be a chaotic, unindexed mess!

Once data is extracted during a list crawling operation, it needs to be stored in a usable format. Common structured data formats include CSV (Comma Separated Values), JSON (JavaScript Object Notation), or directly into databases like MySQL or MongoDB. This structured storage makes the data easy to analyze and integrate into other systems.

To dive deeper into how these digital explorers navigate the web, we encourage you to read our guide on Understanding Website Crawling: The Foundation of SEO.

The Core Process of List Crawling

List crawling is a systematic process designed to gather specific, structured data efficiently. It’s not just about randomly browsing the web; it’s a targeted mission. Here are the fundamental steps involved:

- URL List Preparation: The journey begins with identifying the websites or specific pages we want to crawl. This list of URLs, or “seed URLs,” can be prepared manually, or we can use automated tools to find relevant starting points. For example, if we’re researching new clean beauty products, our initial list might include major online retailers or beauty blogs.

- Crawler Configuration: Once we have our target URLs, we configure our crawler. This step involves setting up rules that tell the crawler exactly what to look for and how to behave. We define filters to identify the specific information we want to extract, whether product names, prices, ingredient lists, customer reviews, or links to images. This is where we teach our bot to recognize the patterns of a “list” on a website.

- Running the Crawl: With our configuration in place, we release our crawler. It visits each URL in our list, follows the rules we’ve set, and navigates through pages, handling elements like pagination (the “next page” buttons) or infinite scrolling (where content loads as you scroll down). This is where the magic happens, as the crawler systematically gathers information.

- Data Parsing and Extraction: As the crawler fetches web pages, it simultaneously parses the HTML content. Parsing means breaking down the web page’s code to locate the specific data points we’re interested in. Once identified, this data is extracted and prepared for storage. This step ensures we only collect the relevant pieces of information from potentially vast amounts of web content.

- Data Analysis and Storage: After the data is extracted, it’s compiled and stored in a structured format, like a CSV file, a JSON document, or a database. This organization makes the data readily available for analysis, allowing us to derive insights, track trends, or make informed decisions. For instance, we could analyze gathered ingredient lists to spot emerging trends in skincare formulations or compare product prices across different vendors.

How Crawled Data Powers SEO

List crawling isn’t just for gathering raw data; it’s a powerful ally in the field of Search Engine Optimization (SEO). By understanding how crawlers work and leveraging their capabilities, we can significantly boost our website’s visibility and performance.

- Indexing and Ranking: The fundamental purpose of search engine crawlers is to discover new web pages and updates to existing ones. Crawling allows search engines to add these pages to their vast index, and once indexed, the pages can be ranked for relevant search queries. Without proper crawling, a website’s content may not appear in search results at all, making it harder for users to find. Being crawled and indexed does not guarantee good rankings, but it is a prerequisite for ranking at all.

- Crawlability: This refers to a search engine’s ability to access and navigate a website’s pages. If a site has poor crawlability due to broken links, blocked pages, or a confusing structure, search engines may struggle to index it effectively. By using list crawling techniques on our own site, we can identify and fix these issues, ensuring all necessary pages are indexed and ranked correctly.

- Backlink Analysis: In SEO, backlinks (links from other websites to ours) are a crucial signal of authority and trustworthiness. We can use list crawling to find backlinks to our website, analyze our competitors’ backlink profiles, and identify new opportunities for building valuable links. This insight helps us understand our position in the digital ecosystem.

- Competitor Monitoring: Staying ahead means knowing what our competitors are doing. List crawling allows us to systematically monitor competitor websites, extracting data on their product offerings, pricing strategies, content updates, and even their SEO structure. This intelligence can inform our own strategies and help us adapt quickly to market changes.

- Keyword Research and SERP Tracking: Understanding what people are searching for and how our content ranks is vital. We can leverage list crawling to perform extensive keyword research, identifying popular search terms relevant to beauty and wellness. Furthermore, we can track our Search Engine Results Page (SERP) rankings for specific keywords, even monitoring how they perform in different geographical locations for local SEO. For more on this, explore The Role of Keywords in SEO and Content Creation.
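As an example of crawling your own site for crawlability problems, the sketch below does a breadth-first crawl of a single host and reports internal links that return an error status, along with the pages that link to them. It uses only the standard library; the `fetch` function, which must return a `(status_code, html)` pair, is an assumption you would implement yourself (with `urllib.request`, for instance, by catching `HTTPError` and returning its `code`).

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse


class LinkCollector(HTMLParser):
    """Gather the href of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        href = dict(attrs).get("href")
        if tag == "a" and href:
            self.links.append(href)


def find_broken_links(start_url, fetch, max_pages=500):
    """Breadth-first crawl of one site.

    `fetch(url)` must return (status_code, html). Returns a dict mapping each
    broken internal URL (status >= 400) to the pages that link to it.
    """
    host = urlparse(start_url).netloc
    queue, seen = deque([start_url]), {start_url}
    referrers = {start_url: []}
    broken, fetched = {}, 0
    while queue and fetched < max_pages:
        url = queue.popleft()
        status, html = fetch(url)
        fetched += 1
        if status >= 400:
            broken[url] = referrers[url]
            continue
        collector = LinkCollector()
        collector.feed(html)
        for href in collector.links:
            # Make links absolute and drop "#fragment" parts before comparing.
            link, _fragment = urldefrag(urljoin(url, href))
            if urlparse(link).netloc != host:
                continue  # only audit internal links
            referrers.setdefault(link, []).append(url)
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return broken
```

The result tells you both what is broken and where to fix it, since each broken URL comes with the list of pages that still point to it.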

Essential Tools and Techniques for Effective List Crawling

To start on our list crawling journey, we need the right tools and techniques. Just as a chef needs quality ingredients and precise methods, we need robust software and smart strategies to extract data efficiently and reliably.

Python is a popular choice for web crawling and scraping thanks to its simplicity, extensive libraries, and strong community support. With Python, we have access to a rich ecosystem of open-source libraries specifically designed for these tasks.

Optimizing crawler performance is paramount for efficiency and speed. We don’t want our crawler to take days to gather data that could be collected in hours. Key strategies include:

- Limiting request frequency: Sending too many requests too quickly can lead to our crawler being blocked or seen as malicious. We add delays between requests, often just a few seconds, to mimic human behavior.

- Running crawlers in parallel: For large-scale operations, we can run multiple crawling processes simultaneously. This parallelism significantly speeds up data collection by spreading the workload.

- Using headless browsers: Many modern websites rely heavily on JavaScript to load content dynamically. Traditional crawlers might miss this content. Headless browsers (browsers without a graphical user interface) can execute JavaScript, allowing our crawler to “see” and interact with the page just like a human user would.
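The first two strategies pull in opposite directions, so one common compromise is to run several workers but make them share a single rate limit. The sketch below does this with the standard library’s `ThreadPoolExecutor`; the `RateLimiter` class and the two-second default interval are illustrative choices, not settings from any particular tool, and headless browsers are not covered here.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor


class RateLimiter:
    """Allow at most one request start every `interval` seconds across all threads."""

    def __init__(self, interval):
        self.interval = interval
        self._lock = threading.Lock()
        self._next_time = 0.0

    def wait(self):
        # Reserve the next free time slot under the lock, then sleep outside it
        # so other threads can reserve their own slots meanwhile.
        with self._lock:
            now = time.monotonic()
            start = max(now, self._next_time)
            self._next_time = start + self.interval
        time.sleep(max(0.0, start - now))


def fetch_all(urls, fetch, workers=4, interval=2.0):
    """Fetch URLs in parallel while spacing out request starts; keeps input order."""
    limiter = RateLimiter(interval)

    def polite_fetch(url):
        limiter.wait()
        return fetch(url)

    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(polite_fetch, urls))
```

Because the limiter spaces out request starts, adding workers only speeds things up when individual responses are slow; it never pushes the request rate above one per `interval`. For crawls that span several domains, keeping one limiter per domain is a natural extension.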

For a deeper dive into making your website more accessible to crawlers and optimizing for search engines, check out our insights on Technical SEO: Ensuring Your Website is Crawlable and Indexable.

Key Libraries for Python-Based Crawlers

When it comes to building powerful list crawling solutions in Python, several libraries form the backbone of our toolkit:

- Requests: This neat and simple HTTP library allows us to make web requests (GET, POST, etc.) to retrieve the raw HTML content of a web page. It’s our primary tool for fetching the data from the internet. You can learn more about it here: requests library.

- BeautifulSoup: Once we have the HTML content, we need to parse it, meaning we navigate its structure and extract specific elements. BeautifulSoup is a fantastic Python library for parsing HTML and XML documents. It lets us pinpoint data with CSS selectors (through its select() method) or by searching tag names and attributes with find() and find_all(). It does not support XPath; for XPath queries, a library such as lxml is needed. Find its capabilities at BeautifulSoup.

- Scrapy Framework: For more complex, large-scale, and robust list crawling projects, Scrapy is often our go-to. It’s a comprehensive framework that provides a complete package for web crawling and scraping. Scrapy handles many of the complexities for us, such as managing requests asynchronously, handling cookies, and processing data through item pipelines. It’s one of the most popular Python web scraping and crawling frameworks, with roughly 50k stars on GitHub.

- Playwright and Selenium: These are browser automation libraries. While Requests and BeautifulSoup are great for static HTML, modern websites often load content dynamically using JavaScript. Playwright and Selenium can control a real web browser (in a “headless” or visible mode), allowing us to interact with web pages, click buttons, fill forms, and wait for dynamic content to load before scraping. This is crucial for handling JavaScript-heavy sites.

Handling Dynamic and JavaScript-Heavy Websites

The internet is a dynamic place, and many modern websites use JavaScript to load content, create interactive elements, and even display entire lists of items. This presents a challenge for traditional crawlers that only read the initial HTML.

- JavaScript rendering: When a website uses JavaScript to fetch and display data after the initial page load, our crawler needs to be able to “render” that JavaScript. This means executing the code on the page to make the content visible, just like a regular web browser does.

- Dynamic content and AJAX: Many sites use Asynchronous JavaScript and XML (AJAX) to fetch new data in the background without refreshing the entire page. This is common for infinite scrolls, search results that update without a page reload, or product listings that appear as you filter them. Our crawlers need to simulate these interactions.

- Infinite scroll: This is a common pattern where more content loads automatically as you scroll down a page, rather than having traditional “next page” buttons. To crawl these lists, our tools need to simulate scrolling down the page and waiting for the new content to appear.

- Tools for dynamic content: For this, we rely on headless browsers controlled by libraries like Selenium or Playwright. These tools launch a real browser instance (though often invisible to us), execute the JavaScript, and allow us to scrape the fully rendered page. Google also provides resources on this, such as Using Puppeteer to extract data.

- API reverse-engineering: Sometimes, the dynamic content is loaded from a hidden API endpoint. Instead of using a full browser, we can sometimes “reverse-engineer” these API calls and make direct requests to them. This can be significantly faster and more efficient than browser automation, especially for endless lists, as it bypasses the need to render the entire page.
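Once you have spotted the background request in your browser’s developer tools, paging through it directly can be as simple as the sketch below. The endpoint, the `offset`/`limit` parameter names, and the `{"items": [...]}` response shape are all hypothetical; real APIs vary (page numbers, cursors, different field names), and `fetch_text` is a function you supply that returns the response body as a string.

```python
import json
from urllib.parse import urlencode


def crawl_json_api(endpoint, fetch_text, page_size=50, max_pages=100):
    """Page through a JSON list endpoint until it returns a short or empty page.

    Assumes a hypothetical API that accepts `offset` and `limit` query
    parameters and responds with {"items": [...]}.
    """
    items = []
    for page in range(max_pages):
        query = urlencode({"offset": page * page_size, "limit": page_size})
        payload = json.loads(fetch_text(f"{endpoint}?{query}"))
        batch = payload.get("items", [])
        items.extend(batch)
        if len(batch) < page_size:  # a short page means we've reached the end
            break
    return items
```

Each request returns structured data directly, so there is no HTML to parse and no JavaScript to execute, which is where the speed advantage over browser automation comes from.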

Overcoming Challenges and Ensuring Ethical Compliance

While list crawling is incredibly powerful, it’s not without its problems. Websites employ various anti-scraping measures to protect their data, and it’s our responsibility to navigate these challenges ethically and legally.

Common anti-scraping measures include:

- Rate limiting: Websites may limit the number of requests from a single IP address within a certain timeframe. Exceeding this limit can lead to temporary or permanent blocks.

- IP blocking: If a website detects suspicious activity from an IP address (e.g., too many requests, unusual patterns), it might block that IP entirely.

- CAPTCHAs: CAPTCHAs (“Completely Automated Public Turing tests to tell Computers and Humans Apart”) are challenges designed to prevent bots from accessing content. Solving CAPTCHAs programmatically can be complex.

Managing errors and exceptions is crucial for robust list crawling operations. Websites can have broken links, unexpected page layouts, or server issues. We implement strategies like:

- Retry mechanisms: If a request fails (e.g., due to a temporary network issue), we can configure our crawler to automatically retry the request a few times before giving up.

- Exponential backoff: When retrying, instead of trying again immediately, we multiply the delay after each failed attempt (for example, waiting 1, 2, and then 4 seconds). This gives the server time to recover and makes our crawler less aggressive.

- Logging errors: We carefully log any errors, warnings, or unexpected behavior. This helps us debug issues, identify problematic URLs, and refine our crawling logic.
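The three strategies above fit naturally into one small wrapper. This is a minimal sketch using Python’s standard `logging` module; the function name, the default of four attempts, and the one-second base delay are illustrative assumptions. The `sleep` parameter exists only so the delays can be inspected in tests.

```python
import logging
import time

log = logging.getLogger("list_crawler")


def fetch_with_retries(fetch, url, max_attempts=4, base_delay=1.0, sleep=time.sleep):
    """Call fetch(url), retrying failures with exponentially growing delays.

    Waits base_delay, 2 * base_delay, 4 * base_delay, ... between attempts and
    logs every failure so problematic URLs can be reviewed later.
    """
    for attempt in range(max_attempts):
        try:
            return fetch(url)
        except OSError as exc:  # urllib's URLError, timeouts and connection errors are OSErrors
            if attempt == max_attempts - 1:
                log.error("giving up on %s after %d attempts: %s", url, max_attempts, exc)
                raise
            delay = base_delay * 2 ** attempt
            log.warning("attempt %d for %s failed (%s); retrying in %.1fs", attempt + 1, url, exc, delay)
            sleep(delay)
```

As written, this retries every network-level error, including HTTP errors such as a 404 that will never succeed. In practice you may want to retry only timeouts, connection failures, and server responses like 429 or 5xx, and adding a small random “jitter” to each delay helps keep many crawler workers from retrying in lockstep.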

Beyond technical challenges, we must always consider legal compliance, especially data privacy laws like GDPR (General Data Protection Regulation) in Europe and CCPA (California Consumer Privacy Act) in the United States. We ensure our list crawling activities respect these regulations, particularly when dealing with personal data.

Managing Your Crawler’s Identity and Footprint

To avoid detection and blocking, we carefully manage how our crawler presents itself and interacts with websites.

- User agents: Every time a browser or bot visits a website, it sends a “user agent string” that identifies itself (e.g., “Googlebot” or a specific browser version). Websites may block scrapers that use generic or outdated user agents. We can rotate user agents, making our requests appear to come from different browsers or devices, or even set a custom, identifiable user agent that includes contact information. For more on this, see More on user agent strings.

- IP address rotation: Since websites can block IP addresses, rotating our IP address is a key strategy. We achieve this by using proxy servers or VPNs. A proxy server acts as an intermediary, routing our requests through different IP addresses. This distributes our requests across many IPs, making it harder for a website to identify and block us as a single entity.

- Request frequency: Being mindful of how quickly we send requests is critical. We implement delays between requests (e.g., a few seconds) to avoid overwhelming the website’s server. This makes our crawler appear more human-like and reduces the chances of triggering anti-bot measures.
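Of the user agent options mentioned above, the custom, identifiable one is the simplest to show. The sketch below sets it with Python’s standard `urllib.request`; the bot name, information URL, and email address are placeholders to replace with your own, not real values.

```python
import urllib.request

# Placeholder identity: swap in your own bot name, info page and contact address.
USER_AGENT = "BeautyListBot/1.0 (+https://example.com/bot-info; contact: crawler@example.com)"


def build_request(url, user_agent=USER_AGENT):
    """Return a urllib Request that identifies the crawler honestly."""
    return urllib.request.Request(url, headers={"User-Agent": user_agent})


def fetch_html(url, timeout=10):
    """Download a page with the custom user agent and decode it to text."""
    with urllib.request.urlopen(build_request(url), timeout=timeout) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.read().decode(charset, errors="replace")
```

Following the “Name/version (+info URL)” pattern that major crawlers use makes it easy for site administrators to recognize your bot in their logs and to contact you.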

Best Practices for Ethical List Crawling

Ethical considerations are just as important as technical prowess in list crawling. We believe in being good digital citizens and ensuring our activities benefit everyone.

- Respecting robots.txt: Most websites have a robots.txt file, which is a set of rules that tells crawlers which parts of the site they are allowed or disallowed to access. We always check and respect these rules. Ignoring robots.txt can lead to legal issues and is generally considered bad practice.

- Sitemap.xml files: Websites often provide a sitemap.xml file, which lists all the URLs on the site that the owner wants search engines to crawl. This can be a valuable resource for our list crawling efforts, as it provides a clear map of the site’s content. See Sitemap.xml files for more details.

- Server load: We’re always considerate of the target website’s server. Our goal is to extract data without negatively impacting their performance or causing downtime. We limit our request speed and avoid making too many concurrent requests to a single domain.

- Data privacy: This is paramount. We ensure our list crawling adheres strictly to data privacy laws like GDPR and CCPA. We do not extract personally identifiable information (PII) without explicit consent and ensure any collected data is handled securely and responsibly.

- Providing contact information: When setting our crawler’s user agent, we often include contact information (e.g., an email address). This allows website administrators to reach out to us if they have concerns about our crawling activity.
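Checking robots.txt and reading a sitemap are both supported by Python’s standard library. In this minimal sketch, `urllib.robotparser` answers the allow/disallow question and `xml.etree.ElementTree` extracts the `<loc>` entries from a standard `<urlset>` sitemap. The helper names are our own, and sitemap index files (which point to further sitemaps) are not handled.

```python
import urllib.robotparser
import xml.etree.ElementTree as ET

# Namespace used by the sitemaps.org protocol for <urlset>/<url>/<loc>.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def load_robots(lines):
    """Parse robots.txt lines (for a live site: rp.set_url(...) then rp.read())."""
    rp = urllib.robotparser.RobotFileParser()
    rp.parse(lines)
    return rp


def sitemap_urls(sitemap_xml):
    """Return every <loc> listed in a <urlset> sitemap document."""
    root = ET.fromstring(sitemap_xml)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)]


def crawlable_urls(urls, rp, user_agent):
    """Keep only the URLs robots.txt allows for this user agent."""
    return [url for url in urls if rp.can_fetch(user_agent, url)]
```

If the site sets a `Crawl-delay`, `rp.crawl_delay(user_agent)` returns it, which is a sensible minimum for the pause between your requests.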

By following these best practices, we ensure our list crawling is not only effective but also responsible and sustainable. This aligns with our commitment to transparency and positive user experience, which you can read more about in User Experience (UX) and Its Impact on SEO.

Frequently Asked Questions about Web Crawlers

When diving into list crawling and web data extraction, people naturally have questions about the different crawlers they encounter online. Understanding these digital visitors helps us better appreciate how data flows across the internet and how we can work with (rather than against) these automated systems.

What are the different types of web crawlers?

The internet is busy with different types of crawlers, each serving unique purposes in our digital ecosystem. Think of them as specialized workers, each with their own job description and goals.

Search engine crawlers are probably the ones you’re most familiar with, even if you don’t realize it. Google’s Googlebot and Microsoft’s Bingbot are the heavy hitters here, working tirelessly to find and catalog web content so it appears in search results. Together, these two account for more than 60% of all legitimate bot traffic online. Without them, searching the web would be nearly impossible.

SEO analytics crawlers are the research assistants of the digital marketing world. These specialized bots help SEO professionals understand how websites perform, analyze competitor strategies, and identify opportunities for improvement. AhrefsBot, for instance, reportedly crawls around 6 billion web pages daily, which Ahrefs says makes it the second most active crawler after Googlebot. These tools are invaluable for anyone serious about improving their website’s visibility.

Commercial crawlers serve specific business needs, often custom-built for particular data extraction tasks. They might monitor product prices across e-commerce platforms, gather market intelligence, or collect comprehensive product catalogs for analysis. Tools like Screaming Frog help website owners audit their own sites for technical issues.

Social media bots work behind the scenes to make sharing content more engaging. When you paste a link on Facebook or Twitter, their crawlers quickly visit that URL to generate those helpful preview cards with titles, descriptions, and images. Pinterest’s crawler is particularly active, ranking sixth in overall web traffic among all bots.

The newest category, AI and LLM data crawlers, represents the future of web crawling. As artificial intelligence systems like large language models require massive amounts of text data for training, specialized crawlers have emerged to collect this information responsibly. OpenAI’s GPTBot, despite being relatively new, already generates nearly 1% of all legitimate bot traffic.

Here’s how the major players compare:

| Crawler | Purpose | Behavior & Management |
|---|---|---|
| Googlebot | Search engine indexing | Respects robots.txt, crawls frequently, focuses on content quality |
| AhrefsBot | SEO analysis and backlink discovery | Very active crawler, can be controlled via robots.txt |
| GPTBot | AI training data collection | Newer bot, respects standard blocking methods |

How can you tell the difference between a good bot and a malicious one?

Distinguishing between helpful crawlers and potentially harmful bots is crucial for website security and performance. Good bots typically follow established protocols and identify themselves clearly, while malicious ones often try to hide their true nature.

User agent verification is your first clue, though not a reliable one on its own, since any bot can copy another bot’s user agent string. Legitimate crawlers announce themselves with proper user agent strings that clearly identify who they are. For example, Googlebot’s user agent includes the bot’s name, a version number, and a URL pointing to Google’s documentation about the crawler. You can verify these claims by checking the bot’s IP address against official lists provided by the companies.

IP address and DNS lookups provide another layer of verification. Google provides detailed instructions on verifying Googlebot’s identity through reverse DNS lookups. Legitimate crawlers typically come from verified IP ranges owned by their respective companies.
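Google’s documented procedure works like this: do a reverse DNS lookup on the visiting IP, check that the resulting host name belongs to a Google domain, then do a forward DNS lookup on that name and confirm it resolves back to the same IP. Below is a minimal sketch using Python’s `socket` module. It only accepts `googlebot.com` and `google.com` hosts and only handles IPv4 (via `gethostbyname_ex`), so check Google’s current documentation for the full list of crawler domains before relying on it. The resolver arguments exist only so the logic can be tested without network access.

```python
import socket

# Assumed allow-list; Google documents the exact domains for each of its crawlers.
GOOGLE_CRAWLER_DOMAINS = (".googlebot.com", ".google.com")


def is_verified_googlebot(ip, reverse_lookup=socket.gethostbyaddr,
                          forward_lookup=socket.gethostbyname_ex):
    """Reverse-resolve the IP, check the domain, then confirm the forward lookup matches."""
    try:
        host = reverse_lookup(ip)[0]          # (hostname, aliases, addresses)
    except OSError:                           # no PTR record, lookup failure
        return False
    if not host.endswith(GOOGLE_CRAWLER_DOMAINS):
        return False
    try:
        _name, _aliases, addresses = forward_lookup(host)
    except OSError:
        return False
    # A spoofed PTR record won't forward-resolve back to the visitor's IP.
    return ip in addresses
```

The forward lookup is the important step: anyone who controls an IP range can make its reverse DNS claim to be `googlebot.com`, but only Google controls where `googlebot.com` names actually resolve.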

Behavioral analysis reveals a lot about a bot’s intentions. Good bots respect your website’s robots.txt file, maintain reasonable request frequencies, don’t overwhelm your server, and follow link structures logically. Malicious bots, on the other hand, might ignore your crawling guidelines, make excessive requests in short timeframes, or exhibit other abnormal request patterns that suggest they’re trying to extract data aggressively or cause harm.

How does list crawling apply to the beauty and wellness industry?

The beauty and wellness industry is particularly rich with data opportunities that list crawling can open up. This field thrives on transparency, education, and staying current with trends – all areas where systematic data collection proves invaluable.

Ingredient analysis represents one of the most powerful applications. By crawling product listings across multiple retailers, beauty brands and consumers can track which ingredients are trending, identify potentially harmful components, and spot emerging clean beauty formulations. This data helps conscious consumers make informed choices while helping brands understand market demands.
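As a small illustration, the sketch below counts how many crawled products list each ingredient. The record shape (a `name` plus a comma-separated `ingredients` string) is a hypothetical output of a list crawl, and real INCI lists often need more cleanup than the lowercasing and trimming done here.

```python
from collections import Counter


def ingredient_frequencies(products):
    """Count how many products list each ingredient (case-insensitive, once per product)."""
    counts = Counter()
    for product in products:
        # Hypothetical record shape: {"name": ..., "ingredients": "Aqua, Glycerin, ..."}
        names = {part.strip().lower() for part in product["ingredients"].split(",") if part.strip()}
        counts.update(names)
    return counts
```

Running the same count on crawls taken weeks apart and comparing the results is one simple way to see which ingredients are becoming more common.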

Product trend spotting becomes much more sophisticated with list crawling. Instead of manually checking dozens of beauty websites, automated systems can track new product launches, monitor seasonal trends, and identify emerging categories like waterless skincare or refillable packaging options.

Competitor price monitoring helps both businesses and consumers stay informed about market dynamics. Beauty retailers can ensure competitive pricing while consumers can find the best deals on their favorite products. This transparency benefits everyone in the ecosystem.

Consumer sentiment analysis through review crawling provides deep insights into what people really think about products. By systematically collecting and analyzing customer reviews, brands can identify common concerns, celebrate popular features, and improve their formulations based on real user feedback.

The beauty industry’s focus on identifying emerging clean beauty trends particularly benefits from list crawling techniques. As consumers become more ingredient-conscious, tracking which clean formulations gain popularity, which certifications matter most, and how sustainable packaging evolves helps both brands and consumers navigate this rapidly changing landscape.